I am currently studying for a crucial exam. My primary technique is practice: I solve as many problems, assignments, and past exams as possible, because this is the best way to learn for exams (it is for me, and arguably for everybody). With a large quantity of input data, my brain recognizes and learns certain patterns, problem types, and typical assignment requirements. This helps me develop an understanding of the subject without memorizing anything specific. Instead, I adapt my understanding to a related context, which lets me generate task-specific results. The more data and the more practice, the better, more accurate, and faster the responses.

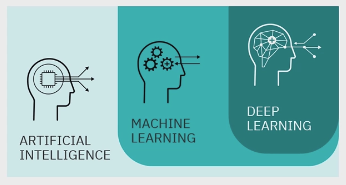

I might sound a little technical or even awkward, but with that simple example I want to introduce today's topic: Machine Learning, Deep Learning, and Neural Networks.

What I described is, in fact, a learning process of our brain. Scientists recognized this and aimed to replicate it in machines. They wanted machines to learn like us.

Machine Learning

Machine Learning is a subfield of AI that uses algorithms to analyze data. Don’t confuse these algorithms with ordinary “if, else” algorithms, where the machine follows explicit instructions. Instead, a machine learning model makes decisions without being explicitly programmed for each case, which enables autonomous problem solving.

So machines, as in our exam-preparation example, learn from massive quantities of data. They learn from examples, not from rules. This enables them to solve problems and make decisions independently.

Let's take an example. Say we have a medical machine that predicts heart failure. To train it, we input data such as a patient's heart rate (bpm), BMI, age, and sex, along with whether their heart failed or not. After a while, the machine will be capable of predicting possible heart failure from these parameters. In general, the more data it analyzes, the more accurate its predictions become. This is a simple example of machine learning.
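To make the heart-failure example more concrete, here is a minimal sketch in plain Python. It assumes logistic regression as the learning algorithm, which is only one of many possible choices, and the patient records are invented for illustration and are not real medical data. The names `patients`, `scale`, `predict_risk`, and `train` are hypothetical.

```python
import math

# Hypothetical, made-up patient records (NOT real medical data):
# (heart rate in bpm, BMI, age, sex as 0/1) -> 1 if heart failure occurred, else 0
patients = [
    ((62, 21.0, 34, 0), 0),
    ((70, 23.5, 41, 1), 0),
    ((66, 24.0, 29, 1), 0),
    ((74, 26.0, 48, 0), 0),
    ((88, 31.0, 63, 1), 1),
    ((95, 33.5, 71, 0), 1),
    ((91, 29.5, 68, 1), 1),
    ((84, 34.0, 59, 0), 1),
]

def scale(features):
    """Bring every feature into a roughly comparable range."""
    bpm, bmi, age, sex = features
    return [bpm / 100, bmi / 40, age / 100, float(sex)]

def predict_risk(weights, bias, features):
    """Logistic regression: a weighted sum squashed into a value between 0 and 1."""
    z = bias + sum(w * x for w, x in zip(weights, scale(features)))
    return 1 / (1 + math.exp(-z))

def train(records, epochs=5000, learning_rate=0.5):
    """Adjust the weights a little after every example to reduce the prediction error."""
    weights, bias = [0.0] * 4, 0.0
    for _ in range(epochs):
        for features, label in records:
            error = predict_risk(weights, bias, features) - label
            xs = scale(features)
            weights = [w - learning_rate * error * x for w, x in zip(weights, xs)]
            bias -= learning_rate * error
    return weights, bias

weights, bias = train(patients)

# Two new, unseen (and equally hypothetical) patients
low = predict_risk(weights, bias, (64, 22.0, 30, 1))
high = predict_risk(weights, bias, (92, 32.0, 70, 0))
print(f"risk for patient A: {low:.2f}, risk for patient B: {high:.2f}")
```

Nobody wrote a rule such as "if bpm is above 80, predict heart failure". The model derived its own weights from the examples.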

There are 3 types of Machine Learning:

1. Supervised Learning

Supervised learning uses labeled data for training: every training example comes with the correct answer. This enables models to label, predict, or classify new information. It is well suited to tasks where rapid and straightforward answers are required without much reasoning, such as sorting emails into spam and not spam.

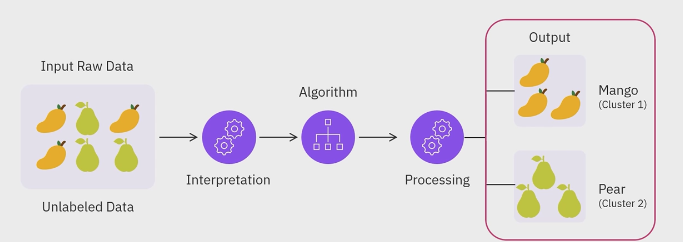
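As a rough illustration of learning from labeled data, the sketch below classifies a new item by looking up the most similar labeled example. This technique is called 1-nearest-neighbor and is just one simple supervised method. The fruit measurements and the names `labeled_fruit` and `classify` are made up for this example.

```python
import math

# Labeled training examples: (features, label). Values are invented for illustration.
# Features: (weight in grams, diameter in cm)
labeled_fruit = [
    ((150, 7.0), "apple"),
    ((170, 7.5), "apple"),
    ((140, 6.8), "apple"),
    ((10, 2.0), "grape"),
    ((8, 1.8), "grape"),
    ((12, 2.2), "grape"),
]

def classify(features, training_data):
    """Return the label of the closest labeled example (1-nearest-neighbor)."""
    _, nearest_label = min(
        training_data,
        key=lambda example: math.dist(features, example[0]),
    )
    return nearest_label

prediction = classify((160, 7.2), labeled_fruit)
print(prediction)
```

The labels are what make this "supervised": without the `"apple"` and `"grape"` tags, the function would have nothing to return.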

2. Unsupervised Learning

In this type of machine learning, the training data is unlabeled, meaning that the model finds patterns and groupings in the data on its own.

Source: IBM Skills Network
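A small sketch of finding patterns in unlabeled data follows. It assumes k-means clustering, one common unsupervised method, reduced here to a single dimension. The purchase amounts, the starting centers, and the function name `k_means_1d` are hypothetical.

```python
def k_means_1d(values, centers, iterations=10):
    """Group unlabeled numbers around centers that move toward the data."""
    for _ in range(iterations):
        # Assign every value to its nearest center
        clusters = [[] for _ in centers]
        for value in values:
            nearest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
            clusters[nearest].append(value)
        # Move every center to the average of its cluster (keep it if the cluster is empty)
        centers = [
            sum(cluster) / len(cluster) if cluster else center
            for cluster, center in zip(clusters, centers)
        ]
    return centers, clusters

# Unlabeled data: purchase amounts with no tag saying which customer group they belong to
purchases = [12, 15, 11, 14, 95, 102, 98, 110]
centers, clusters = k_means_1d(purchases, centers=[0.0, 50.0])
print(centers, clusters)
```

The algorithm was never told that there are "small" and "large" purchases. It discovered the two groups from the numbers alone.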

3. Reinforcement Learning

It is a reward-and-punishment mechanism that encourages the model to maximize its reward. It is useful for tasks like playing chess or navigating, where good reasoning over many steps is needed.

This is part of the reason why DeepSeek’s R1 performs well on math, logic, and reasoning problems in general: reinforcement learning played a central role in its training. Most other generative models, such as GPT-4, rely primarily on self-supervised pre-training on large amounts of text, with reinforcement learning from human feedback applied only as a later fine-tuning step.
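The reward mechanism can be sketched with a toy example. The code below assumes tabular Q-learning, one classic reinforcement learning algorithm, and a made-up environment: a corridor of five positions where the agent is rewarded for reaching the right end and slightly punished for every other step. It is not how large language models are trained, and all names and numbers are hypothetical.

```python
import random

N_STATES = 5          # positions 0..4 in a corridor; position 4 is the goal
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right

def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True     # reward for reaching the goal
    return next_state, -0.1, False       # small punishment for every other step

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _step in range(100):                # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)                          # explore: try something random
            else:
                a = max((0, 1), key=lambda i: q[state][i])    # exploit: best known action
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward + discounted future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q

q_table = train()
# Read off the learned behavior for every non-goal position
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

The agent is never told that "right" is correct. It only receives rewards and punishments and gradually prefers the actions that lead to more reward.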

Deep Learning & Neural Networks

Source: IBM Skills Network

Deep learning is a specialized subset of Machine Learning in which models are built by stacking layers of simple computing units into a neural network, which is then trained on data.

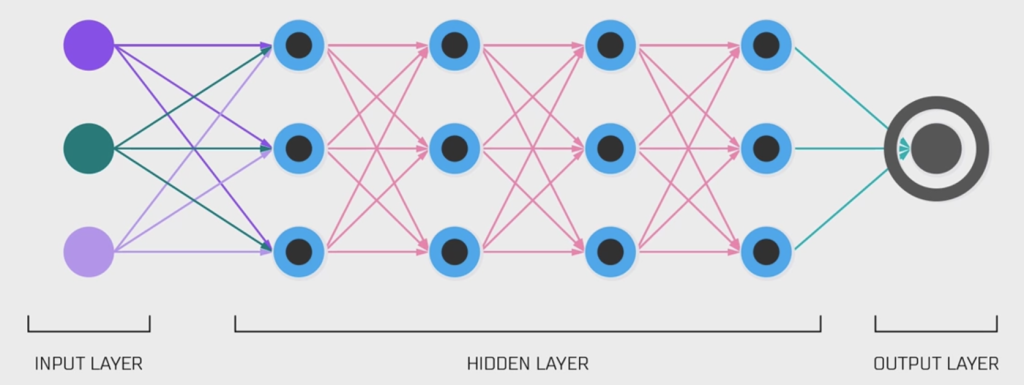

Neural networks are artificial structures loosely modeled on the brain. They consist of three main parts: an input layer, hidden layers, and an output layer.

The input layer receives the data and passes it on to the hidden layers. The hidden layers process the data, and finally the output layer produces the result.

Source: IBM Skills Network

This structure was inspired by the structure of our brain. The number of (hidden) layers is the reason why it is called “deep” learning. More hidden layers let the network learn more complex patterns, although deeper networks also need more data and computation to train well.
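The flow from the input layer through the hidden layers to the output layer can be sketched in a few lines. This is a minimal illustration, assuming fully connected layers and a sigmoid activation. The weights are hand-picked, hypothetical numbers, not trained values.

```python
import math

def sigmoid(x):
    return 1 / (1 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """One layer: every neuron takes a weighted sum of all inputs, then applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def network_forward(inputs, layers):
    """Pass the data through every layer in order: input -> hidden layers -> output."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical, hand-picked weights: 2 inputs -> 3 hidden neurons -> 1 output
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.5, 0.2]], [0.05]),                                 # output layer
]

output = network_forward([1.0, 2.0], layers)
print(output)
```

Making the network "deeper" in this sketch simply means adding more `(weights, biases)` entries to the `layers` list.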

The Training Process

Source: IBM Skills Network

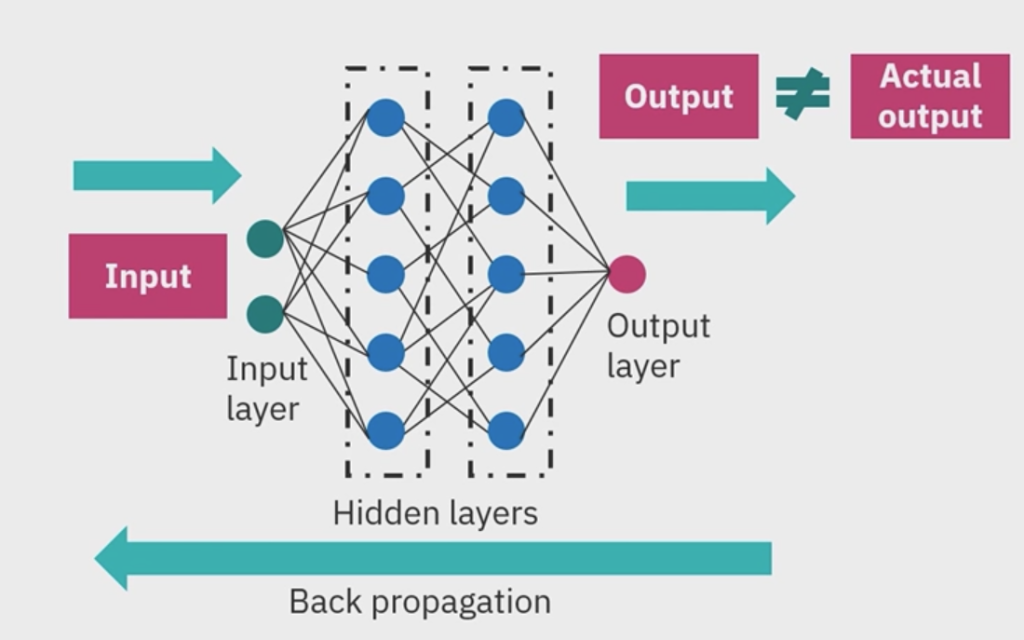

The training of a neural network can be broken down into three main steps.

1. Forward pass / forward propagation

First, the data passes through the layers of the neural network (NN), which computes an output.

2. Calculation of the Error

The correct result for each training example is already known. In this second step, the error (or loss) is determined by calculating the difference between the generated output and the actual result.

3. Backward pass / backpropagation

After the error is determined, it is sent back through the network, and the internal parameters (weights) are adjusted to reduce future errors and improve the outputs.

This process is repeated until the predictions are accurate and the error is minimal.
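The three steps above can be sketched as a tiny training loop. This is a simplified illustration under several assumptions: a network with two inputs, two hidden neurons, and one output, sigmoid activations, squared error as the loss, and plain gradient descent. The task (learning the logical OR function), the starting weights, and all names are hypothetical.

```python
import math

def sigmoid(x):
    return 1 / (1 + math.exp(-x))

def forward(params, x):
    """Step 1: compute the hidden activations and the network's output."""
    hidden = [
        sigmoid(w[0] * x[0] + w[1] * x[1] + b)
        for w, b in zip(params["w_hidden"], params["b_hidden"])
    ]
    z_out = sum(w * h for w, h in zip(params["w_out"], hidden)) + params["b_out"]
    return hidden, sigmoid(z_out)

def total_loss(params, data):
    """Step 2 over the whole data set: sum of squared differences."""
    return sum((forward(params, x)[1] - y) ** 2 for x, y in data)

def train(params, data, epochs=5000, learning_rate=0.5):
    for _ in range(epochs):
        for x, y in data:
            hidden, output = forward(params, x)     # 1. forward pass
            error = output - y                      # 2. error: output vs. known result
            # 3. backward pass: how much did each parameter contribute to the error?
            delta_out = error * output * (1 - output)
            delta_hidden = [
                delta_out * w * h * (1 - h)
                for w, h in zip(params["w_out"], hidden)
            ]
            # Adjust every parameter slightly in the direction that reduces the error
            for j in range(len(hidden)):
                params["w_out"][j] -= learning_rate * delta_out * hidden[j]
                params["b_hidden"][j] -= learning_rate * delta_hidden[j]
                for i in range(len(x)):
                    params["w_hidden"][j][i] -= learning_rate * delta_hidden[j] * x[i]
            params["b_out"] -= learning_rate * delta_out
    return params

# Training data for logical OR: (inputs, known correct output)
data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

# Hypothetical starting weights
params = {
    "w_hidden": [[0.3, -0.2], [-0.4, 0.5]],
    "b_hidden": [0.0, 0.0],
    "w_out": [0.2, -0.3],
    "b_out": 0.0,
}

initial_loss = total_loss(params, data)
train(params, data)
final_loss = total_loss(params, data)
predictions = [round(forward(params, x)[1]) for x, _ in data]
print(f"loss before: {initial_loss:.3f}, loss after: {final_loss:.3f}")
print(predictions)
```

Real frameworks compute the backward pass automatically, but the loop has the same shape: predict, measure the error, adjust, repeat.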

All in all, just as we have a learning process for our intelligence, machines have theirs too. They are not just instructed to perform tasks but are taught through examples and algorithms. This is called Machine Learning. There are three main ML techniques: Supervised, Unsupervised, and Reinforcement Learning. Deep Learning is a specialized subfield of Machine Learning where models are trained using neural networks, which are loosely inspired by the human brain.